April 2021 newsletter

with links on AI scaling, particularly new East Asian record-breaking work & deep reinforcement learning.

April 2021’s Gwern.net newsletter is now out; previous, March 2021 (archives). This is a collation of links and summary of major changes, overlapping with my Changelog; brought to you by my donors on Patreon.

1 Writings

Better Greek Variable Suggestions (use ϰ, ς, υ, ϖ, Υ, Ξ, ι, ϱ, ϑ, or Π instead)

2 Links

2.1 AI

“Set Transformer: A Framework for Attention-based Permutation-Invariant Neural Networks”, Lee et al 2018; “Perceiver: General Perception with Iterative Attention”, Jaegle et al 2021 (skinny Transformers applied recurrently; given the reinvention, one might ask “is attention getting too much attention?”, especially given how many Transformer tweaks don’t pan out or have antecedents: does this indicate a gold rush? Probably not: if the marginal return on this research direction had fallen below that of competitors, we would see those neglected directions invading Transformer topics, while we continue to see the reverse, and many applications remain untouched by all the new approaches, suggesting that we still don’t pay enough attention)
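
The attention mechanism both papers build on fits in a few lines. Set Transformer pools a set using learned “seed” queries, and the Perceiver uses a small latent array as the queries over a large input; in both, each output is a softmax-weighted sum over the inputs, so shuffling the inputs cannot change it (the Perceiver reintroduces order via positional features added to the inputs). A minimal sketch, assuming a single query, a single head, and no learned projections; `attention_pool` is a hypothetical helper, not either paper’s code:

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(query, elements):
    """Pool an unordered set of vectors into one vector via dot-product attention.

    Assumed simplification: one head, one query, and keys/values taken to be the
    raw elements (no learned projections).
    """
    d = len(query)
    scores = [sum(q * x for q, x in zip(query, el)) / math.sqrt(d) for el in elements]
    weights = softmax(scores)
    # Each output coordinate is a weighted sum over the set, so reordering the
    # elements only reorders the terms of each sum.
    return [sum(w * el[i] for w, el in zip(weights, elements)) for i in range(d)]

random.seed(0)
inputs = [[random.gauss(0, 1) for _ in range(4)] for _ in range(5)]
query = [0.5, -1.0, 0.25, 2.0]
shuffled = random.sample(inputs, k=len(inputs))
pooled = attention_pool(query, inputs)
pooled_shuffled = attention_pool(query, shuffled)
print(max(abs(a - b) for a, b in zip(pooled, pooled_shuffled)))  # only float rounding error
```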

“Z-IL: Predictive Coding Can Do Exact Backpropagation on Any Neural Network”, Salvatori et al 2021 (scaling local learning rules to ImageNet AlexNet/Resnet & ALE DRL at similar compute cost)

“Super-Convergence: Very Fast Training of Neural Networks Using Large Learning Rates”, Smith & Topin 2017 (the lingering mystery of super-convergence, saving 50–90% compute with LRs as high as 20 (!): what is it, why does it work only sometimes, is there any connection to grokking & can it work for large models like GPT-3 given the tunneling hypothesis?)
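
Super-convergence in the paper is obtained with a single learning-rate cycle (Smith’s “1cycle” policy): ramp up to a very large maximum, come back down, then decay well below the starting rate for the last stretch of training. A minimal sketch of that schedule shape, assuming linear ramps, a final phase of 10% of training, and a final rate of `lr_min / 100`; all three are illustrative choices, not the paper’s exact settings:

```python
def one_cycle_lr(step: int, total_steps: int, lr_min: float, lr_max: float,
                 final_frac: float = 0.1) -> float:
    """Simplified triangular one-cycle schedule (assumed shape, not the paper's recipe)."""
    if not 0 <= step < total_steps:
        raise ValueError("step out of range")
    final_steps = int(total_steps * final_frac)
    cycle_steps = total_steps - final_steps
    half = cycle_steps / 2
    if step < half:  # linear warmup lr_min -> lr_max
        return lr_min + (lr_max - lr_min) * step / half
    if step < cycle_steps:  # linear decay lr_max -> lr_min
        return lr_max - (lr_max - lr_min) * (step - half) / half
    # Final phase: anneal from lr_min down toward lr_min / 100 (assumed factor).
    t = (step - cycle_steps) / max(final_steps, 1)
    return lr_min * (1 - t) + (lr_min / 100) * t

schedule = [one_cycle_lr(s, 1000, lr_min=0.1, lr_max=3.0) for s in range(1000)]
```

The peak lands at the midpoint of the cycle (step 450 here), and the last steps run far below the starting rate.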

“Rip van Winkle’s Razor, a Simple New Estimate for Adaptive Data Analysis” (an unusual approach to estimating generalization—by quantifying the information-theoretic simplicity of all the powerful DL research discoveries since 2012, into ~1 kilobyte. And yet, what a kilobyte…)
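
The logic is the classic Occam bound: if everything effectively tuned against a test set can be written down in k bits, at most 2^k distinct methods were in play, and a union bound over them limits how far the test set can be overfit. A sketch of the textbook version (two-sided Hoeffding plus a union bound); this form is an assumption here, and the essay’s own estimate may rest on a different, tighter inequality:

```python
import math

def occam_gap(bits: float, n: int, delta: float = 0.05) -> float:
    """Max |test error - true error| holding simultaneously for all 2**bits methods.

    Per method, two-sided Hoeffding gives P(gap > eps) <= 2*exp(-2*n*eps**2).
    A union bound over 2**bits methods, solved for eps at total failure
    probability delta, gives eps = sqrt((bits*ln 2 + ln(2/delta)) / (2n)).
    """
    return math.sqrt((bits * math.log(2) + math.log(2 / delta)) / (2 * n))

# ~1 kilobyte (8192 bits) of accumulated "discoveries" against a test set of
# 50,000 examples, the size of the ImageNet validation set.
print(f"{occam_gap(8192, 50_000):.3f}")
```

The gap shrinks as 1/√n and grows only with the square root of the description length, which is why compressing a decade of tricks into ~1 kilobyte matters.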

“Ambigrammatic Figures”, Levin & Huang 2020 (making horrifying StyleGAN faces that can be rotated 180° by projection & then gradient-ascent towards an upside-down face)

Congratulations to OpenAI on 1 year of GPT-3 & OA API. Has it really only been a year?—it has truly exceeded expectations.

Naver announces 204b-parameter Korean-language NN, “HyperCLOVA” (KO; architecture, training compute, and benchmark/loss performance are all unknown, although the model is apparently dense; 650b-token training dataset. Who knew Naver was even trying? “And we are here as on a darkling plain / Swept with confused alarms of struggle and flight, / Where ignorant armies clash by night.”)

“PanGu-α: Large-scale Autoregressive Pretrained Chinese Language Models with Auto-parallel Computation”, Zeng et al 2021 (Zh; Huawei’s GPT-3-200b prototype, trained on indigenous Chinese GPU+DL stack; a partial replication, due to incomplete training on ~43b tokens; the 13b-parameter model checkpoint has been released for download, and they are considering releasing the 200b-parameter model… Ding commentary)

New 𝒪(100b)-parameter Transformer models announced at Google I/O 2021: LaMDA (EN; chatbot), MUM (multimodal multilingual search/translation/Q&A)

“PLUG” (Zh): a 27b-parameter BERT-like Chinese language model, targeting 200b next (Alibaba followup to StructBERT/PALM)

“CogView: Mastering Text-to-Image Generation via Transformers”, Ding et al 2021 (another Chinese DALL·E clone, post-M6: n = 30m text-image pairs, 4b-parameter GPT, models to be released)

“VideoGPT: Video Generation using VQ-VAE and Transformers”, Yan et al 2021; “GODIVA: Generating Open-DomaIn Videos from nAtural Descriptions”, Wu et al 2021 (DALL·E for video on HowTo100M: VQ-VAE + sparse attention)

“Efficient Large-Scale Language Model Training on GPU Clusters”, Narayanan et al 2021 (Nvidia ‘Megatron-LM’ software for scaling up to 3072 A100 GPUs; allows 1t-parameter models at 502 petaFLOP/s or 50% efficiency, cf TPU rival, GSPMD, and note Patterson et al 2021 estimates GPT-3 at ~3.5m V100 GPU-hours, so OA got ~20% efficiency?); “We expect to see multi-trillion-parameter models by next year, and 100 trillion+ parameter models by 2023” —Nvidia CEO Jensen Huang (subtitles)
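
The efficiency comparison is simple arithmetic: total training FLOPs divided by GPU-seconds gives sustained throughput per GPU, which is then divided by the hardware peak. A sketch under stated assumptions: ~3.14e23 FLOPs to train GPT-3 175B (the figure in Brown et al 2020), the ~3.5m V100 GPU-hours above, and peak tensor-core throughputs of 125 TFLOP/s (V100) and 312 TFLOP/s (A100, dense FP16/BF16):

```python
def utilization(total_flops: float, gpu_hours: float, peak_flops_per_gpu: float) -> float:
    """Fraction of peak hardware throughput actually sustained over a training run."""
    sustained_per_gpu = total_flops / (gpu_hours * 3600)  # FLOP/s per GPU
    return sustained_per_gpu / peak_flops_per_gpu

# Assumed inputs: GPT-3 training compute, V100 GPU-hours, V100 peak.
gpt3 = utilization(3.14e23, 3.5e6, 125e12)

# Megatron-LM: 502 petaFLOP/s aggregate over 3072 A100s, against an assumed
# A100 peak of 312 TFLOP/s per GPU.
megatron = 502e15 / 3072 / 312e12

print(f"GPT-3: {gpt3:.0%} of peak; Megatron-LM: {megatron:.0%} of peak")
# -> GPT-3: 20% of peak; Megatron-LM: 52% of peak
```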

Mixture-Of-Experts:

BAAI’s “Wudao Wensu”: 1.75 trillion parameters & multimodal! (prologue)

“Exploring Sparse Expert Models and Beyond”, Yang et al 2021 (1t-parameter hierarchical Switch Transformer trained on 480 V100 GPUs)

“MuZero Unplugged: Online and Offline Reinforcement Learning by Planning with a Learned Model”, Schrittwieser et al 2021 (Reanalyze+MuZero; smooth log-scaling of Ms. Pacman reward with sample size, 10⁷–10¹⁰, showing that DRL for arcade games parallels board games)

“Decision Transformer: Reinforcement Learning via Sequence Modeling”, Chen et al 2021

“Sampled MuZero: Learning and Planning in Complex Action Spaces”, Hubert et al 2021 (MuZero for continuous domains: DM Control Suite/Real-World RL Suite); “Continuous Control for Searching and Planning with a Learned Model”, Yang et al 2020

“Muesli: Combining Improvements in Policy Optimization”, Hessel et al 2021 (catching up with original MuZero)

“Visualizing MuZero Models”, de Vries et al 2021 (reimplementing & introspecting a MuZero)

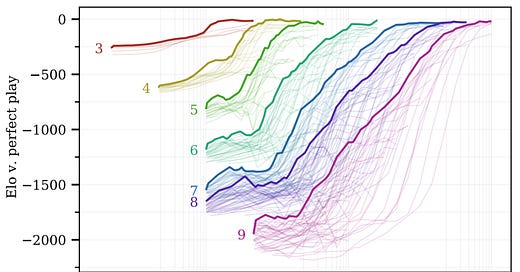

“Scaling Scaling Laws with Board Games”, Jones 2021 (AlphaZero/Hex: highly-optimized GPU implementation enables showing smooth scaling across 6 OOM of compute—2× FLOPS = 66% victory; amortization of training → runtime tree-search, where 10× training = 15× runtime)
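
Assuming the standard logistic Elo model, a fixed win rate per doubling of compute translates into a fixed rating gain per doubling, which is what makes the scaling curve a straight line on a log-compute axis. A small conversion sketch; the 66% figure is from the annotation above, and the helper names are hypothetical:

```python
import math

def elo_gap(win_prob: float) -> float:
    """Rating difference implied by an expected score under the logistic Elo model."""
    if not 0 < win_prob < 1:
        raise ValueError("win_prob must lie strictly between 0 and 1")
    return 400 * math.log10(win_prob / (1 - win_prob))

def expected_score(gap: float) -> float:
    """Inverse of elo_gap: expected score of the stronger side given a rating gap."""
    return 1 / (1 + 10 ** (-gap / 400))

per_doubling = elo_gap(0.66)  # "2x FLOPS = 66% victory"
print(f"~{per_doubling:.0f} Elo per doubling of compute")  # -> ~115 Elo per doubling of compute
```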

“Scaling Laws for Language Transfer Learning”, Christina Kim (Hernandez et al 2021 followup: smooth scaling for En → De/Es/Zh)

“Carbon Emissions and Large Neural Network Training”, Patterson et al 2021 (“…choice of DNN/datacenter/processor can reduce the carbon footprint up to ~100–1000×. These large factors make retroactive estimates difficult.”)

“How to Train BERT with an Academic Budget”, Izsak et al 2021 (BERT in 8 GPU-days—R&D iteration allows finding efficiency; there’s nothing so expensive as demanding research be cheap.^1^)

2.2 Genetics

Everything Is Heritable:

“Precision exercise medicine: understanding exercise response variability”, Ross et al 2019 (“large individual differences in CRF response (range: −33% to +118%) have been observed across the 8 exercise training studies independent of exercise duration”—nothing in psychology, or medicine, makes sense except in the light of individual differences…)

Recent Evolution:

“Analysis of genomic DNA from medieval plague victims suggests long-term effect of Yersinia pestis on human immunity genes”, Immel et al 2021

Engineering:

“China officially bans CRISPR babies, human clones and animal-human hybrids”? (another blow to attempts to project fears & fantasies onto China)

2.3 Politics/Religion

Reflecting Sunlight: Recommendations for Solar Geoengineering Research and Research Governance, National Academies 2021 (media)

“Improving Public Sector Management at Scale? Experimental Evidence on School Governance in India”, Muralidharan & Singh 2020

“Jay-Z’s 99 Problems, Verse 2: A Close Reading with 4th Amendment Guidance for Cops and Perps”, Mason 2012

2.4 Psychology/Biology

“Oxylipin biosynthesis reinforces cellular senescence and allows detection of senolysis”, Wiley et al 2021

“Inside the Secret Sting Operations to Expose Celebrity Psychics”

“If I fits I sits: A citizen science investigation into illusory contour susceptibility in domestic cats (Felis silvestris catus)”, Smith et al 2021

“Cetaceans, sex and sea serpents: an analysis of the Egede accounts of a ‘most dreadful monster’ seen off the coast of Greenland in 1734”, Paxton et al 2005 (is that a legendary cryptid in your pocket, or are you just happy to see me?)

“Building the perfect curse word: A psycholinguistic investigation of the form and meaning of taboo words”, Reilly et al 2020

2.5 Technology

“How Developers Choose Names”, Feitelson et al 2021 (“Another example concerned the function ‘arrangeFilesByName(files)’. When asked the return value…one suggested the number of files reordered”)

“Bringing GNU Emacs to Native Code”, Corallo et al 2020 (using libgccjit to make Emacs 2.3× to 42× faster; gccemacs has been merged into Emacs HEAD & will be available soon)

“Hosting SQLite databases on Github Pages (or any static file hoster)” (a revolution in static website technology: eg running a query need download only 54kb of a 670MB database; fulltext site search is just the beginning of the possibilities of this clever use of range requests)
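
The trick works because SQLite reads its database file in fixed-size pages, so a client-side SQLite engine can turn each page read into an HTTP Range request instead of downloading the whole file. A minimal sketch of the bookkeeping, assuming a single, unsplit database file: read the page size from the 100-byte header, then compute the byte range for any page. `page_range_header` is a hypothetical helper, not the post’s API:

```python
import os
import sqlite3
import struct
import tempfile

SQLITE_MAGIC = b"SQLite format 3\x00"  # first 16 bytes of every SQLite 3 database file

def sqlite_page_size(header: bytes) -> int:
    """Page size stored in the 100-byte SQLite header (big-endian u16 at offset 16)."""
    if len(header) < 100 or header[:16] != SQLITE_MAGIC:
        raise ValueError("not an SQLite 3 database header")
    (size,) = struct.unpack(">H", header[16:18])
    return 65536 if size == 1 else size  # the stored value 1 means 65536

def page_range_header(page_no: int, page_size: int) -> str:
    """Hypothetical helper: HTTP Range header value for one 1-indexed page."""
    if page_no < 1:
        raise ValueError("SQLite pages are numbered from 1")
    start = (page_no - 1) * page_size
    end = start + page_size - 1  # HTTP byte ranges are inclusive at both ends
    return f"bytes={start}-{end}"

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.db")
        con = sqlite3.connect(path)
        con.execute("PRAGMA page_size = 1024")  # only applies before the first page is written
        con.execute("CREATE TABLE t (x INTEGER)")
        con.commit()
        con.close()
        with open(path, "rb") as f:
            size = sqlite_page_size(f.read(100))
        print(size, page_range_header(3, size))  # -> 1024 bytes=2048-3071
```

Fetching `bytes=2048-3071` from a static host returns exactly page 3, so a B-tree lookup that touches a handful of pages downloads kilobytes rather than the whole database.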

“Fontemon: World’s first video game in a font!” (a Pokemon-like CYOA implemented as an OpenType font file; play in browser or text editor—still not quite Turing-complete but definitely the most impressive thing implemented in a font so far)

Fontemon is by far the highlight of SIGBOVIK 2021; but also worth noting: “Back to Square One: Superhuman Performance in Chutes and Ladders Through Deep Neural Networks and Tree Search” · “Deep Deterministic Policy Gradient Boosted Decision Trees” · “Lowestcase and uppestcase letters: Advances in derp learning” · “openCHEAT: Computationally Helped Error bar Approximation Tool—Kick-starting Science 4.0” · “The Newcomb-Benford Law, Applied to Binary Data: An Empirical and Theoretic Analysis” · “Inverted Code Theory: Manipulating Program Entropy” (Tenet fans only—possibly inferior to Moravec 1991?) · “Build your own 8-bit busy beaver on a breadboard!”

Incidentally, it’s curious that while STEM fields have entire annual issues, journals, & conferences devoted to satire (SIGBOVIK; arXiv April Fools papers like Garfinkel et al 2017; Special Topics; the BMJ Christmas issue; the Ig Nobel Prizes & BAHFest), after asking in several places, I have found no instances in the humanities. (I know of many entertaining papers, like Sinhababu 2008 on waifus, but no regular organized publication, with the possible exception of the annual “Latke-Hamantash Debate”.)

2.6 Economics

“The Kelly Criterion in Blackjack, Sports Betting, and the Stock Market”, Thorp 2006
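
The core formula: for a bet paying b-to-1 that wins with probability p, staking the fraction f* = p - (1 - p)/b of your bankroll maximizes the expected logarithm of wealth. A minimal sketch; the 51% even-money bet is an illustrative example, not taken from the paper:

```python
import math

def kelly_fraction(p: float, b: float) -> float:
    """Kelly stake as a fraction of bankroll for a b-to-1 bet won with probability p.

    f* = p - (1 - p) / b; with no edge (f* <= 0), stake nothing.
    """
    return max(0.0, p - (1 - p) / b)

def log_growth(f: float, p: float, b: float) -> float:
    """Expected log-growth of the bankroll per bet when staking fraction f."""
    return p * math.log(1 + b * f) + (1 - p) * math.log(1 - f)

f_star = kelly_fraction(0.51, 1.0)  # even-money bet with a 1% edge: stake ~2%
```

Over-betting is punished asymmetrically: here, staking twice f* drives expected log-growth to roughly zero, while staking half of f* still keeps about three-quarters of the optimal growth rate.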

“The Performance Pay Nobel” (CEO pay as blackbox optimization problem)

“The Ocean’s Hot Dog: The Development of the Fish Stick”, Kelly 2008 (out of nostalgia, I bought some fish sticks for the first time in decades; better than I remembered, even if I had no tartar handy)

2.7 Philosophy

“The Aesthetics of Smelly Art”, Shiner & Kriskovets 2007; “The Odor Value Concept in the Formal Analysis of Olfactory Art”, Kraft 2019; “Perfumery as an art form”/notes, Qualia Computing 2020 (more: manufacturing: “The Scent of the Nile: Jean-Claude Ellena creates a new perfume”; human smell is better than you think: “Mechanisms of Scent-tracking in Humans”, Porter et al 2006 (video; see also “Poor Human Olfaction is a 19th Century Myth”, McGann 2017); olfactory white; Kōdō, which unexpectedly appears in Knuth. C. Thi Nguyen’s description of the more bizarre & avant-garde perfumes made me curious enough to nose around & order 39 LuckyScent samplers.)

2.8 Miscellaneous

Sarah Bernhardt (Lions. Lots of lions.)

Another thought, looking at ‘Employer Costs for Employee Compensation’ (PDF):

“Moore’s Law”: the cost of a transistor halves every ~19 months;

“Anti-Moore’s Law”: the cost of a synapse doubles every ~119 years.
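
Converting the two stated periods into constant annual rates makes the contrast concrete. A sketch using only the ~19-month and ~119-year figures above; no compensation data is assumed:

```python
def annual_factor(period_years: float, change: float) -> float:
    """Constant yearly multiplier that compounds to `change` over `period_years`."""
    return change ** (1 / period_years)

transistor = annual_factor(19 / 12, 0.5)  # cost halves every ~19 months
synapse = annual_factor(119, 2.0)         # cost doubles every ~119 years

print(f"transistor cost: {transistor - 1:+.1%} per year")
print(f"synapse cost: {synapse - 1:+.2%} per year")
```

That is, transistors get roughly 35% cheaper every year, while synapses get roughly 0.6% more expensive.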